When describing the process of disruptive innovation, Clay Christensen also set out to describe the process by which visionaries develop a technology in a commercially unsuccessful way. He called it cramming.

Cramming is a process of trying to make a not-yet-good-enough technology great without allowing it to be bad. In other words, it’s taking an ambitious goal and aiming at it with vast resources of time and money without allowing for mundane trial-and-error experimentation in business models.

To illustrate cramming I borrowed his story of how the transistor was embraced by incumbents in the US vs. entrants in Japan and how that led to the downfall of the US consumer electronics industry.

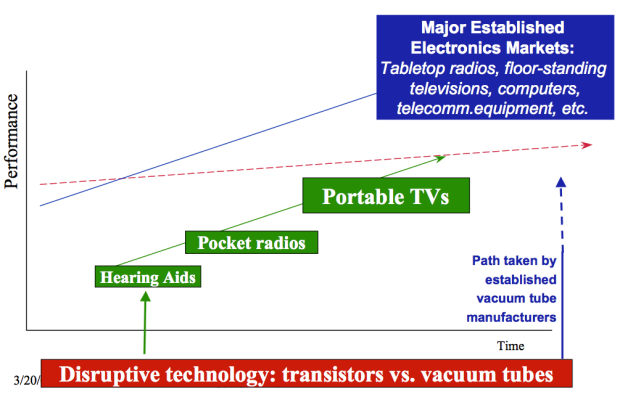

Small upstarts were able to take the invention and wrap a new business model around it, one that motivated the current players to ignore or flee their entry. They thus successfully displaced the entrenched incumbents even though the incumbents were investing heavily in the technology and the entrants weren’t.

In the image above, the blue “path taken by established vacuum tube manufacturers” is the cramming approach. The green path is the entry by outsiders, who worked on minor new products that could make use of transistors in their rough, early state of development.

The history of investment in transistor-based electronics shows how following the money (i.e. R&D) did not lead to value creation. Quite the opposite. There are many such examples: The billions spent on R&D by Microsoft did not help them build a mobile future, and the billions spent on R&D by Nokia did not help them build a computing future.

There are other white elephant stories, such as IBM’s investment in speech recognition to replace word processing, the Japanese government’s spending on “Fifth Generation Computing” and almost all research into machine translation and machine learning from the 1960s to the present.

But today we hear about initiatives such as package delivery drones and driverless cars and robots and Hyperloops and are hopeful. Perhaps under the guiding vision of the wisest, most benevolent business wizards, breakthrough technologies and new infrastructures can finally be realized and we can gain the growth and wealth that we deserve but are so sorely lacking.

But the failure of crammed technologies isn’t rooted in a lack of wisdom. It was the wisest of minds which foresaw machine learning, advanced computing, mobility and convergence coming decades before they came. It was their wisdom which convinced others that resources should be spent on these initiatives. And it was the concentrated mind power of thousands of scientists which spent hundreds of billions in academic and government research.

What failed wasn’t the vision but the timing and the absence of a refinement process. Technologies which succeed commercially are not “moonshots.”[1] They come from a grinding, laborious process of iteration and discovery long after the technology is invented.

The technology is one part of the problem to be solved; the other is how to get people to use it. And that problem is rooted in understanding the jobs people have to get done and how the technology can be used defensibly. That’s where the rub is. An unused technology is a tragic failure. Not just because it has no value but because the resources (those beautiful minds) used in making it could have been applied elsewhere.

Building usage means imparting and retrieving learning through a conversation with the customer. That conversation is best spoken with a vocabulary of sales and profits. Without profits the value is unclear. Nothing is proven. Without economic performance data, the decision of resource allocation becomes a battle of egos.[2] The decision may be right but going by past history the odds are low.

Incidentally, timing is the other element that is key to success. It might seem that timing really is a matter of luck. But timing can be informed by the same conversation with the customer. As you observe adoption you can also measure how long it takes for a technology to be adopted. You can do A/B tests and see which approach is adopted faster.

The most reliable method of breakthrough creation is not the moonshot but a learning process that involves steady iteration. Small but profitable wins. A driverless car might be achieved but first a driver-assisting car might teach the right lessons. An electric car might be achieved but first a hybrid car might teach the lessons needed. A delivery drone might be achieved but first a programmable UPS truck might be a better way to learn.

And finally, Android (née Linux) in 2005 might have been foreseen as the future of mobile operating systems but it took the learning from iOS to shape it into a consumer-friendly product. Even when you see a moonshot work, you realize that a lot of learning had to have taken place. It’s like the story of an overnight success that took a lifetime of perseverance.

1. It could be said that the space and nuclear weapons programs during the Cold War were the original “moonshots” and they succeeded. But although successful in their goals, those goals were not commercial value creation and we are left with little to show for it. See also Concorde.

2. This is how most research is allocated today.
